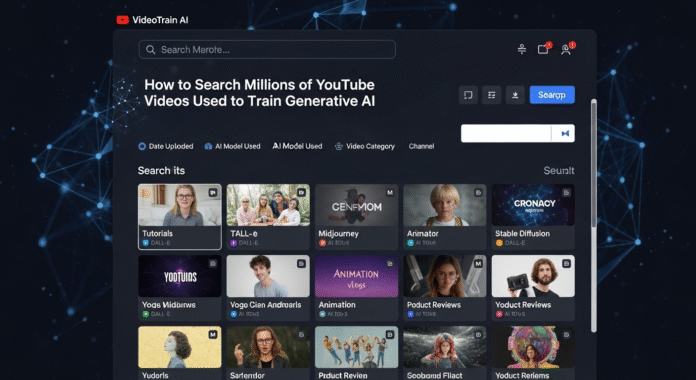

Generative AI has taken the world by storm, and YouTube videos have played a huge part in training its models. From voice synthesis to video captioning, AI systems have learned from millions of hours of video content. But here’s the tricky part: how do you search through the millions of YouTube videos that may have trained these models?

It sounds like searching for a needle in a digital haystack, right? The good news is that it’s absolutely possible if you know where to look, what tools to use, and how to interpret what you find. This blog will walk you through practical, legal, and effective ways to explore the massive sea of YouTube training data behind generative AI models.

You don’t need to be a data scientist to understand this. If you can navigate YouTube and Google, you can follow along. Let’s dive in.

Understanding Why YouTube Videos Are Used in AI Training

Before you start searching, it’s important to understand why YouTube videos are such popular training material for generative AI.

YouTube is the largest video platform in the world, with billions of hours of diverse content. From educational lectures to music videos, this variety gives AI models exposure to different voices, visuals, languages, and cultural contexts. Training on such data helps them recognize patterns in speech, text, and images more effectively.

Moreover, videos offer multimodal data: they include visuals, audio, and text (captions). This is crucial for training advanced generative AI models that handle tasks like automatic video captioning, image generation from audio, or translating video content. By understanding this, you can better see why AI researchers often target YouTube data and how that shapes what you should search for.

Legal and Ethical Considerations Before Searching

Before you go hunting, pause for a second: not every YouTube video is fair game for AI training.

Most YouTube videos are copyrighted, which means scraping and using them without permission can infringe copyright, and automated scraping may also breach YouTube’s Terms of Service. Responsible approaches include partnering with content owners, using videos with Creative Commons licenses, or analyzing only metadata and transcripts for research.

When you search for YouTube videos used to train AI, you’re looking for publicly available metadata or datasets, not trying to download and reuse copyrighted videos yourself. Staying ethical keeps you out of legal trouble and helps support the creators who make the content in the first place.

So, as you search, focus on Creative Commons-licensed videos, open datasets, and research papers that reference video sources. This approach ensures you can explore AI training data safely and responsibly.

Using YouTube’s Advanced Search Tools

The simplest place to start is right on YouTube itself. While YouTube doesn’t give you a list of “videos used in AI training,” it does let you filter and search for the kinds of videos that open datasets often draw on.

Use these tips:

- Search by license: In YouTube’s search filters, choose Creative Commons (under Features). These videos can be reused under the license’s terms, with attribution, which makes them a common source for open AI training datasets.

- Search by keywords: Try keywords like “dataset,” “open data,” “AI training,” or “CC licensed tutorial.”

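- Filter by length: Use the “Over 20 minutes” duration filter. Long-form content such as lectures and tutorials carries far more speech and transcript text than short clips, which may make it more attractive as training material.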

Once you find relevant videos, you can collect their metadata (titles, descriptions, tags, and transcripts) to analyze how they might fit into AI datasets. It’s slower than working from a published dataset, but this method gives you direct access to current, live videos on YouTube.
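If you keep the metadata you collect in a structured form, the three search tips can be re-applied offline. The Python sketch below is a minimal illustration, assuming you have already gathered each video’s title, description, tags, duration, and license yourself. The VideoMeta record, the matches_tips helper, and the KEYWORDS tuple are hypothetical names; the license values follow the two the YouTube Data API reports ("creativeCommons" and "youtube").

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class VideoMeta:
    """One collected video record (illustrative fields, not an official schema)."""
    video_id: str
    title: str
    description: str = ""
    tags: List[str] = field(default_factory=list)
    duration_s: int = 0
    # "creativeCommons" or "youtube", the two values the YouTube Data API
    # reports in status.license.
    license_type: str = "youtube"

# Keywords from the search tips, lowercased for case-insensitive matching.
KEYWORDS = ("dataset", "open data", "ai training", "cc licensed")

def matches_tips(v: VideoMeta, min_duration_s: int = 20 * 60) -> bool:
    """True if a record passes all three checks: CC license, keyword, length."""
    text = " ".join([v.title, v.description, *v.tags]).lower()
    return (
        v.license_type == "creativeCommons"
        and any(k in text for k in KEYWORDS)
        and v.duration_s >= min_duration_s
    )

videos = [
    VideoMeta("vid_A", "Open data lecture", duration_s=3600, license_type="creativeCommons"),
    VideoMeta("vid_B", "Cat compilation", duration_s=300),
]
print([v.video_id for v in videos if matches_tips(v)])  # ['vid_A']
```

Adjust KEYWORDS and min_duration_s to your own search; the 20-minute default simply mirrors YouTube’s “Over 20 minutes” filter.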

Leveraging YouTube Transcript Extraction Tools

Another powerful trick is using transcript extraction tools. Many AI models don’t train on raw video; they train on text from video captions or transcripts.

Tools like YouTube Transcript API, yt-dlp, or online caption scrapers can help you pull transcripts from public videos. Once you extract transcripts, you can run searches for keywords, topics, or even stylistic patterns used by AI systems.
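Here is a minimal sketch of the keyword-search step in Python, assuming you have already saved transcripts locally as a dict that maps each video ID to a list of segments with text, start, and duration fields (roughly the shape the youtube-transcript-api package has returned; check the version you install). Fetching is left out, and the helper names are hypothetical.

```python
import re
from typing import Dict, Iterator, List, Tuple

# Assumed segment shape: {"text": str, "start": float, "duration": float}.
# Adapt the keys if your extraction tool returns something different.
Transcripts = Dict[str, List[dict]]

def search_transcripts(transcripts: Transcripts, pattern: str) -> Iterator[Tuple[str, float, str]]:
    """Yield (video_id, start_seconds, text) for each segment matching a regex."""
    rx = re.compile(pattern, re.IGNORECASE)
    for video_id, segments in transcripts.items():
        for seg in segments:
            if rx.search(seg["text"]):
                yield video_id, seg["start"], seg["text"]

def timestamp_url(video_id: str, start: float) -> str:
    """Link to the matching moment; YouTube accepts a t= offset in seconds."""
    return f"https://www.youtube.com/watch?v={video_id}&t={int(start)}s"

transcripts = {"vid_A": [{"text": "Today we build a dataset", "start": 12.5, "duration": 3.0}]}
for vid, start, text in search_transcripts(transcripts, r"\bdataset\b"):
    print(timestamp_url(vid, start), text)
# https://www.youtube.com/watch?v=vid_A&t=12s Today we build a dataset
```

Regular expressions keep the search flexible: a pattern such as r"\b(dataset|corpus)\b" catches several related terms in one pass.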

This is useful because it mirrors how AI models analyze content. If you know the kind of text AI systems train on, you can track down which types of videos they likely learned from. This gives you a clearer picture of how content flows into AI systems.

Remember, only extract transcripts from public videos, and don’t redistribute copyrighted text. Use it for research and analysis only.

Exploring Public Datasets That Include YouTube Videos

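Here’s where things get really interesting. Many research groups have published massive datasets built from YouTube videos and used to train AI models. Most of them distribute video IDs, timestamps, labels, or precomputed features rather than the video files themselves.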

Some well-known ones include:

- YouTube-8M – A dataset of millions of YouTube video IDs with associated labels.

- AudioSet – Over 2 million human-labeled 10-second audio clips from YouTube videos.

- Kinetics – A large-scale dataset of human action videos from YouTube.

- HowTo100M – Instructional YouTube videos with subtitles, used for multimodal learning.

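You can search these datasets by label, category, or video ID, and then locate the actual videos on YouTube. This is the most direct way to find videos that are documented as AI training data, although most of these datasets were built for video and audio understanding research rather than specifically for generative models.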
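As an illustration of searching a dataset by label, here is a minimal Python sketch for AudioSet’s segment lists. It assumes the CSV layout those files use: comment lines starting with #, then one row per clip with a YouTube ID, start and end times in seconds, and a quoted, comma-separated list of label IDs. The function name is hypothetical and the sample rows are placeholders, not real videos. Other datasets ship different formats, so treat this as a pattern rather than a drop-in parser.

```python
import csv
import io
from typing import List, Optional, Tuple

Row = Tuple[str, float, float, List[str]]

def parse_audioset_segments(text: str, wanted_label: Optional[str] = None) -> List[Row]:
    """Parse AudioSet-style segment rows: YTID, start_seconds, end_seconds, "labels"."""
    # Drop the '#'-prefixed header/comment lines before handing the rest to csv.
    lines = (line for line in io.StringIO(text) if not line.startswith("#"))
    rows: List[Row] = []
    # skipinitialspace handles the space before each field, including the quoted label list.
    for row in csv.reader(lines, skipinitialspace=True):
        if len(row) < 4:
            continue  # blank or malformed line
        ytid, start, end = row[0], float(row[1]), float(row[2])
        labels = row[3].split(",")
        if wanted_label is None or wanted_label in labels:
            rows.append((ytid, start, end, labels))
    return rows

# Two made-up rows in the assumed layout (placeholder IDs, not real videos).
sample = """# YTID, start_seconds, end_seconds, positive_labels
abc123XYZ_0, 30.000, 40.000, "/m/09x0r,/t/dd00088"
def456UVW_1, 0.000, 10.000, "/m/04rlf"
"""
for ytid, start, end, labels in parse_audioset_segments(sample, "/m/09x0r"):
    print(f"https://www.youtube.com/watch?v={ytid}&t={int(start)}s")
# https://www.youtube.com/watch?v=abc123XYZ_0&t=30s
```

AudioSet’s label IDs are machine identifiers; the dataset also publishes a separate class-label file that maps them to human-readable names, which you can join in the same way.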

Even if some of the videos have been removed, their metadata and labels are often still available for analysis.

Using Research Papers and AI Model Documentation

AI companies don’t usually hand over their training data lists, but they often mention their data sources in research papers or model cards.

For example, Google’s research papers on video understanding models or OpenAI’s multimodal models sometimes reference datasets like YouTube-8M or HowTo100M. By reading these papers, you can see which collections of YouTube videos were used, or at least referenced, during training.

Use Google Scholar or arXiv to search for terms like:

- “trained on YouTube videos”

- “video dataset”

- “multimodal model”

- “video captioning dataset”

Each paper’s references section can lead you down a rabbit hole of related datasets and collections. Think of it as detective work for data nerds: you’re following the breadcrumbs researchers leave behind.
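The same search terms can be sent to arXiv programmatically. This sketch uses arXiv’s public query API (the export.arxiv.org endpoint, its search_query parameter, and its Atom response format) with only the Python standard library. The helper names are hypothetical, and only the URL-building and parsing helpers run without a network connection.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from typing import List

ARXIV_API = "https://export.arxiv.org/api/query"
ATOM = "{http://www.w3.org/2005/Atom}"  # namespace used by the arXiv feed

def arxiv_query_url(phrase: str, max_results: int = 20) -> str:
    """Build an arXiv API URL that searches all fields for an exact phrase."""
    params = {"search_query": f'all:"{phrase}"', "start": 0, "max_results": max_results}
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"

def parse_titles(feed: bytes) -> List[str]:
    """Pull entry titles out of an Atom feed, collapsing internal line breaks."""
    root = ET.fromstring(feed)
    return [" ".join((entry.findtext(f"{ATOM}title") or "").split())
            for entry in root.findall(f"{ATOM}entry")]

def search_arxiv(phrase: str, max_results: int = 20) -> List[str]:
    """Network call; keep a few seconds between requests, as arXiv asks."""
    with urllib.request.urlopen(arxiv_query_url(phrase, max_results)) as resp:
        return parse_titles(resp.read())
```

For example, search_arxiv("video captioning dataset", max_results=5) should return up to five matching paper titles. Pause between terms rather than firing queries back to back.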

Building a Searchable Database of Video Metadata

Once you’ve gathered video IDs from YouTube searches, datasets, or papers, you can build a simple database to organize and search them.

Use tools like Google Sheets, Notion, or even a lightweight database (SQLite) to store:

- Video IDs and links

- Titles and descriptions

- Tags and categories

- Captions or transcripts

- License type (CC or standard YouTube license)

This database becomes your personal “AI training video index.” You can filter by keyword, sort by duration, or tag videos by their likely dataset. Over time, it becomes much easier to pinpoint patterns and trace how AI models may have been influenced by certain kinds of content.
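Here is a minimal SQLite version of that index in Python, using only the standard sqlite3 module. The table layout, column names, and helper functions are illustrative assumptions rather than a standard schema; the sketch stores one row per video and supports the keyword filtering and duration sorting described above.

```python
import sqlite3
from typing import List, Optional, Tuple

COLUMNS = ("video_id", "title", "description", "tags", "transcript",
           "license_type", "duration_s", "dataset")

SCHEMA = """
CREATE TABLE IF NOT EXISTS videos (
    video_id     TEXT PRIMARY KEY,
    title        TEXT,
    description  TEXT,
    tags         TEXT,     -- comma-separated, for simplicity
    transcript   TEXT,
    license_type TEXT,     -- e.g. 'creativeCommons' or 'youtube'
    duration_s   INTEGER,
    dataset      TEXT      -- where the ID came from (a dataset, paper, or search)
)
"""

def open_index(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.execute(SCHEMA)
    return conn

def add_video(conn: sqlite3.Connection, record: dict) -> None:
    """Insert or update one record; columns missing from the dict are stored as NULL."""
    placeholders = ", ".join("?" * len(COLUMNS))  # "?, ?, ..., ?"
    conn.execute(
        f"INSERT OR REPLACE INTO videos ({', '.join(COLUMNS)}) VALUES ({placeholders})",
        tuple(record.get(c) for c in COLUMNS),
    )
    conn.commit()

def find(conn: sqlite3.Connection, keyword: str,
         license_type: Optional[str] = None) -> List[Tuple[str, str, int]]:
    """Keyword search (ASCII case-insensitive LIKE) over text columns, longest first."""
    sql = ("SELECT video_id, title, duration_s FROM videos "
           "WHERE (title LIKE :kw OR description LIKE :kw OR transcript LIKE :kw)")
    params = {"kw": f"%{keyword}%"}
    if license_type is not None:
        sql += " AND license_type = :lic"
        params["lic"] = license_type
    return conn.execute(sql + " ORDER BY duration_s DESC", params).fetchall()
```

Passing a file path such as "videos.db" to open_index keeps the index between sessions; the default ":memory:" database disappears when the program exits.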

This approach also mirrors how mobile app development teams build datasets for AI-powered apps: they often collect and label media content before feeding it into machine learning pipelines. Learning to organize metadata gives you a skill that’s useful well beyond this kind of search.

Automating the Process With APIs and Scripting

If you want to scale up your search, manual browsing won’t cut it. That’s when automation tools come in handy.

The YouTube Data API lets you search for videos, fetch their metadata, and store the results in bulk. You can write simple Python scripts to query by keyword, filter by license, and collect thousands of video IDs, within the limits of the API’s daily quota.
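A sketch of such a script follows, assuming you have a YouTube Data API key (shown as the placeholder YOUR_API_KEY). It calls the search endpoint with its type, videoLicense, videoDuration, maxResults, and pageToken parameters, mirroring the Creative Commons and long-video filters from YouTube’s own search page. The helper names are hypothetical, and error handling and retries are left out.

```python
import json
import urllib.parse
import urllib.request
from typing import List, Optional

SEARCH_URL = "https://www.googleapis.com/youtube/v3/search"

def search_params(query: str, api_key: str, page_token: Optional[str] = None) -> dict:
    """Query parameters for one search.list page of long, Creative Commons videos."""
    params = {
        "part": "snippet",
        "q": query,
        "type": "video",                   # the license/duration filters need type=video
        "videoLicense": "creativeCommons",
        "videoDuration": "long",           # "long" means longer than 20 minutes
        "maxResults": 50,                  # largest page size the endpoint allows
        "key": api_key,
    }
    if page_token:
        params["pageToken"] = page_token
    return params

def extract_ids(response: dict) -> List[str]:
    """Keep only video results from a search.list JSON response."""
    return [item["id"]["videoId"] for item in response.get("items", [])
            if item.get("id", {}).get("kind") == "youtube#video"]

def collect_ids(query: str, api_key: str, max_pages: int = 3) -> List[str]:
    """Follow nextPageToken for up to max_pages pages (each page spends quota)."""
    ids: List[str] = []
    token: Optional[str] = None
    for _ in range(max_pages):
        url = f"{SEARCH_URL}?{urllib.parse.urlencode(search_params(query, api_key, token))}"
        with urllib.request.urlopen(url) as resp:
            data = json.load(resp)
        ids.extend(extract_ids(data))
        token = data.get("nextPageToken")
        if not token:
            break
    return ids
```

Calling collect_ids("open data lecture", "YOUR_API_KEY") would return at most 150 IDs with the default three pages. Each page costs quota, so raise max_pages cautiously.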

You can also combine this with transcript extraction tools and natural language processing (NLP) to analyze the text content. This helps you find which videos match the topics or language style that AI models might learn from during training.
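For the text-analysis step, one simple, well-established technique is TF-IDF, which scores a transcript highly when it uses a query’s words often while few other transcripts do. The Python sketch below is a stand-in chosen for illustration, not how any AI developer actually selects training data. It assumes transcripts are already saved as plain strings keyed by video ID, and the function names are hypothetical.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; deliberately crude."""
    return re.findall(r"[a-z0-9']+", text.lower())

def tfidf_rank(transcripts: Dict[str, str], query: str) -> List[Tuple[str, float]]:
    """Rank videos by the summed TF-IDF weight of the query's terms in each transcript."""
    docs = {vid: Counter(tokenize(text)) for vid, text in transcripts.items()}
    n = len(docs)
    terms = set(tokenize(query))
    # Smoothed IDF: terms that appear in fewer transcripts count for more.
    idf = {t: math.log((1 + n) / (1 + sum(t in c for c in docs.values()))) + 1 for t in terms}
    scores = []
    for vid, counts in docs.items():
        total = sum(counts.values()) or 1  # avoid dividing by zero on empty transcripts
        scores.append((vid, sum(counts[t] / total * idf[t] for t in terms)))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)

ranked = tfidf_rank(
    {"vid_A": "we collect training data for a speech model",
     "vid_B": "a funny cat video with more cats"},
    "training data",
)
print(ranked[0][0])  # vid_A
```

TF-IDF captures topic overlap only. Matching language style would need richer features, such as sentence embeddings from an NLP library.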

Automation saves time, especially if you plan to analyze millions of videos. Just make sure you follow YouTube’s API usage limits and terms of service, and never download videos without permission.
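Quota arithmetic shows why scale matters. The sketch below assumes the YouTube Data API’s published defaults at the time of writing (10,000 units per project per day, 100 units per search.list call, 1 unit per videos.list call, and up to 50 items per call); check the current quota documentation before relying on these figures. The function name is hypothetical.

```python
import math

# Assumed figures; verify against the current YouTube Data API quota documentation.
DEFAULT_DAILY_QUOTA = 10_000                      # units per project per day
COST_PER_CALL = {"search.list": 100, "videos.list": 1}
ITEMS_PER_CALL = 50   # max results per search page, max IDs per videos.list call

def days_needed(n_videos: int, method: str, daily_quota: int = DEFAULT_DAILY_QUOTA) -> int:
    """Days of quota needed to cover n_videos with one API method."""
    calls = math.ceil(n_videos / ITEMS_PER_CALL)
    return math.ceil(calls * COST_PER_CALL[method] / daily_quota)

print(days_needed(1_000_000, "search.list"))  # 200
print(days_needed(1_000_000, "videos.list"))  # 2
```

Under those assumptions, discovering a million videos through search would take about 200 days of default quota, while fetching metadata for a million IDs you already hold from a published dataset would take about two. Starting from datasets is therefore far cheaper than searching.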

Future Tools That May Make This Easier

The process of tracking training data is still evolving. Soon, we may see tools that directly label which videos have been used to train specific AI models, especially as transparency rules grow.

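Regulators in the EU and US are pushing for transparency rules that could require companies to disclose training sources. The EU AI Act, for instance, requires providers of general-purpose AI models to publish a summary of the content used to train them. As such rules spread, platforms like YouTube might add metadata flags or public datasets showing which videos contributed to AI training.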

Until then, combining search techniques, datasets, research papers, and APIs is your best bet. It’s not a one-click solution, but it works, and it builds your skills in handling large-scale media data, which is becoming increasingly valuable in fields like mobile app development, digital media, and AI product design.

Conclusion

Searching millions of YouTube videos used in generative AI training might sound overwhelming at first. But with the right strategy (open datasets, transcripts, metadata, research papers, and automation), you can piece together a clearer picture of how these models learn from video content.

You don’t need a supercomputer or an AI lab badge. You just need curiosity, patience, and a good method. As AI becomes more integrated into mobile app development, education, entertainment, and business, knowing how to trace its data sources will be a valuable edge.

So next time you scroll through YouTube, remember: that random tutorial or cat video might just be secretly teaching an AI how to think.
